Korean Media Reports Samsung Electronics Signs $3 Billion Deal with AMD for Memory Stacks

According to Korean media reports, Samsung Electronics has signed a 4.134 trillion Won (roughly $3 billion) agreement with AMD to supply 12-high HBM3E stacks. AMD uses HBM stacks in its AI and HPC accelerators based on the CDNA architecture. The deal is significant because it gives analysts a rough idea of the AI GPU volumes AMD is preparing to bring to market, provided they know what share of an AI GPU's bill of materials the memory stacks account for. It also suggests that AMD may have negotiated a favorable price for Samsung's HBM3E 12H stacks, given that rival NVIDIA primarily uses HBM3E made by SK Hynix.
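To make the volume argument concrete, here is a back-of-the-envelope sketch that turns a deal value into a count of memory stacks and accelerators. It is illustrative only: a per-stack price stands in for the memory share of the bill of materials, and `assumed_price` is a hypothetical placeholder, not a reported figure. The sketch also assumes the entire ~$3 billion goes to 12-high stacks and that each accelerator carries 8 stacks. The function names are hypothetical.

```python
# Hypothetical back-of-the-envelope estimate of accelerator volume from a memory deal.
# Only the ~$3 billion deal value is reported; every other input is an assumption.

def stacks_covered(deal_value_usd: int, price_per_stack_usd: int) -> int:
    """Whole HBM stacks the deal value buys at an ASSUMED per-stack price."""
    return deal_value_usd // price_per_stack_usd


def accelerators_supplied(stack_count: int, stacks_per_gpu: int = 8) -> int:
    """Whole accelerators that can be populated, ASSUMING 8 stacks per package."""
    return stack_count // stacks_per_gpu


if __name__ == "__main__":
    deal_usd = 3_000_000_000   # ~$3 billion, as reported
    assumed_price = 1_000      # HYPOTHETICAL per-stack price in USD, not real data
    stacks = stacks_covered(deal_usd, assumed_price)
    print(stacks, accelerators_supplied(stacks))
```

Doubling the assumed price halves both counts, so the estimate is only as good as the price assumption fed into it.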

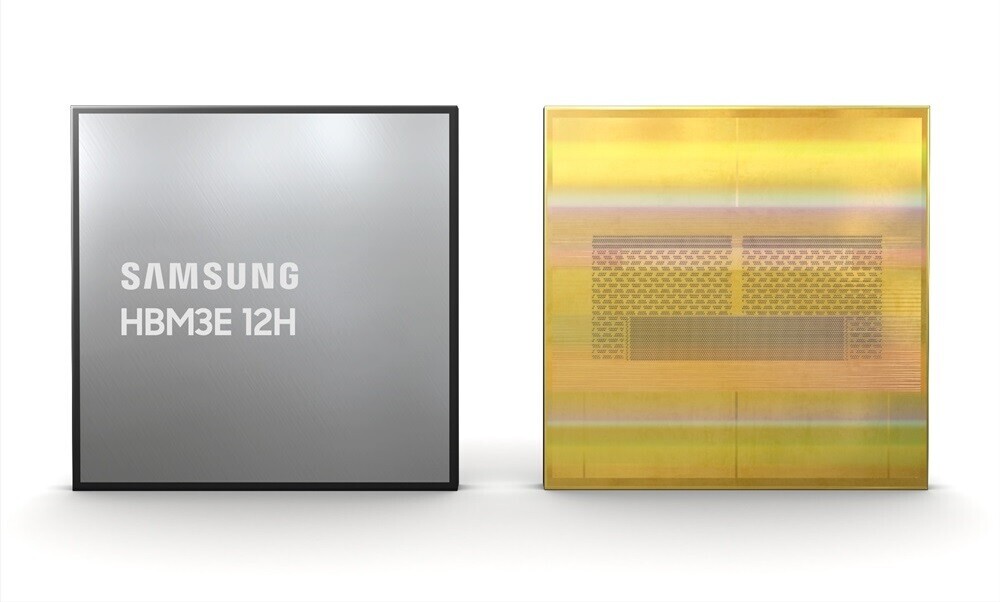

The AI GPU market is expected to become more competitive with the ramp of NVIDIA's "Hopper" H200 series, AMD's CDNA3-based MI350X, and Intel's Gaudi 3 generative AI accelerator. Samsung introduced its HBM3E 12H memory in February 2024. Each stack has 12 layers, 50% more than the 8-high HBM3E stacks that preceded it, and offers a capacity of 36 GB, so an AMD CDNA3 chip with 8 stacks would have 288 GB of memory on package. AMD is expected to launch the MI350X in the second half of 2024. Its main attraction is a set of refreshed GPU tiles built on the TSMC 4 nm EUV foundry node, which makes it a natural candidate for the debut of HBM3E 12H.
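The capacity figures above follow from simple multiplication. The sketch below reproduces them, assuming 3 GB (24 Gbit) per DRAM layer, which is what 36 GB across 12 layers implies, and an 8-high stack as the baseline for the 50% comparison. The constant and function names are illustrative.

```python
# Reproduce the HBM3E 12H capacity arithmetic from the article.
DIE_CAPACITY_GB = 3   # per-layer capacity implied by 36 GB / 12 layers (24 Gbit die)
LAYERS_12H = 12       # HBM3E 12H stack height
LAYERS_8H = 8         # assumed baseline: earlier 8-high HBM3E stacks


def stack_capacity_gb(layers: int, die_gb: int = DIE_CAPACITY_GB) -> int:
    """Capacity of one stack: number of DRAM layers times capacity per layer."""
    return layers * die_gb


def package_capacity_gb(stacks: int, layers: int) -> int:
    """Total on-package memory for an accelerator carrying `stacks` identical stacks."""
    return stacks * stack_capacity_gb(layers)


# Percentage increase in layer count from 8-high to 12-high.
layer_increase_pct = (LAYERS_12H - LAYERS_8H) / LAYERS_8H * 100

print(stack_capacity_gb(LAYERS_12H))       # 36
print(package_capacity_gb(8, LAYERS_12H))  # 288
print(layer_increase_pct)                  # 50.0
```

The same helper gives 24 GB for an 8-high stack, so moving to 12-high raises per-stack capacity by the same 50% as the layer count.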